Natural Language Search

Note: Natural language search is enabled by default for all datasets created after March 1, 2022, and it currently supports images only. If you created your dataset before this date, it will gain natural language search support over time, as we are migrating older datasets through continuous indexing. Reach out to [email protected] if you'd like to learn more.

Natural language search lets you find images using plain English text queries, such as "a pedestrian wearing a suit" or "a chaotic intersection". It works on any image dataset regardless of what the images depict, though the quality of the results varies with the images. Natural language search is an alternative to structured querying: instead of searching your dataset by annotations, autotags, or predictions, you describe what you are looking for in plain English.

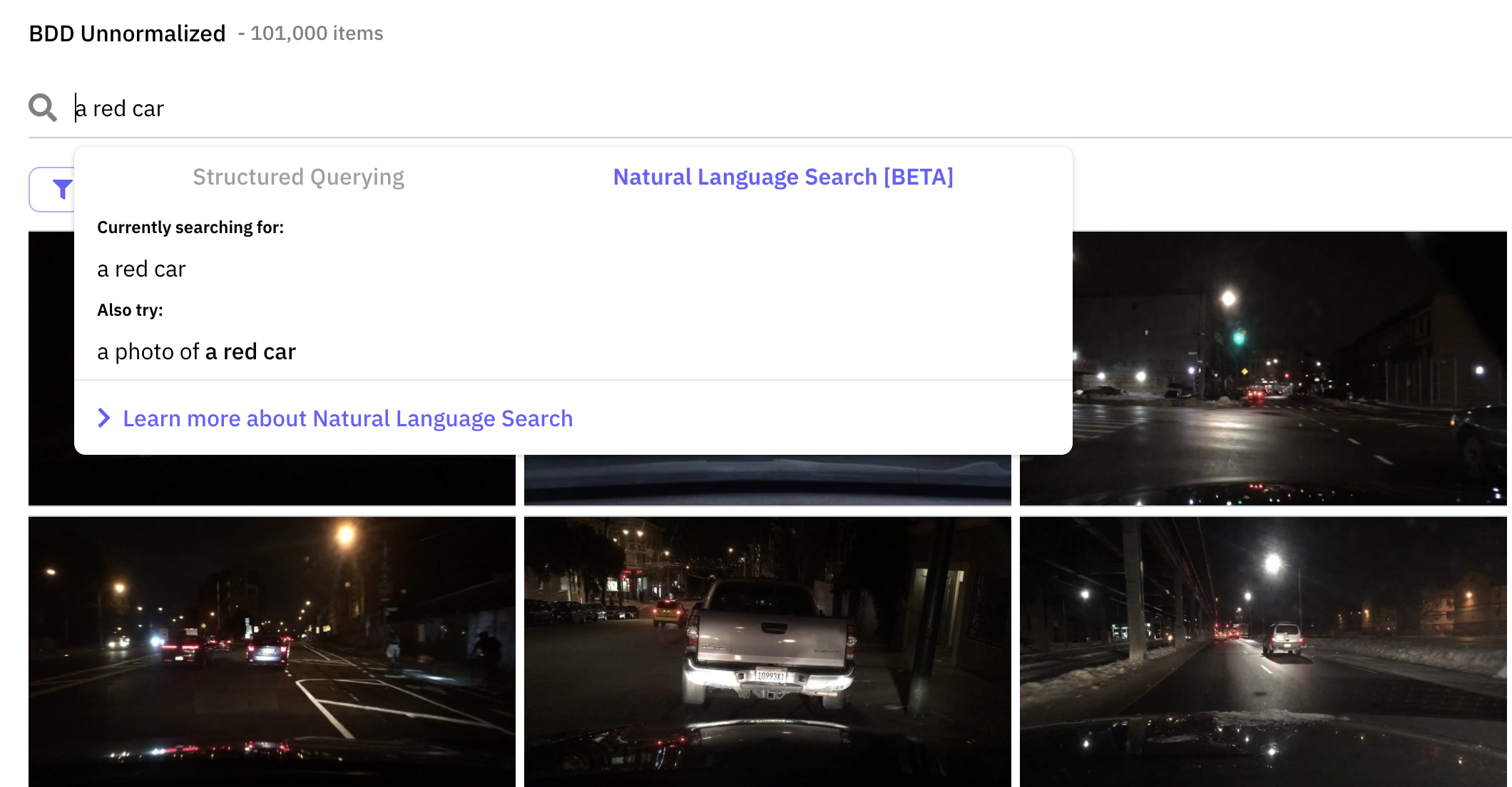

To use natural language search, start typing an English text query into the dataset query bar. The query bar should automatically recognize the text query and switch from structured querying to natural language search. You can also click "Natural Language Search" in the query bar dropdown to toggle natural language search manually.

Using natural language search from the grid view dashboard.

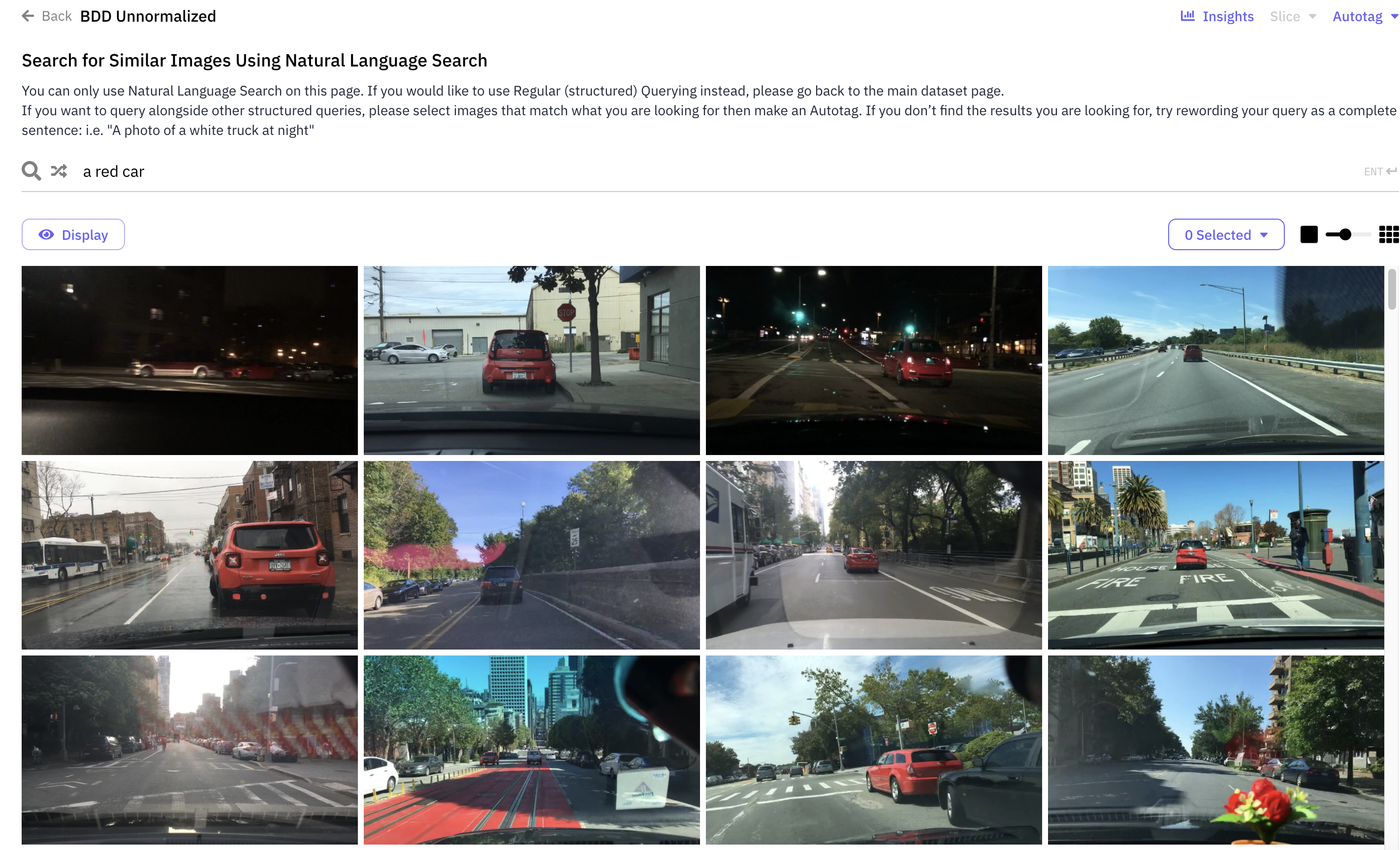

Then press Enter to run the search. This opens a results page showing the images that match your text query.

Results page of natural language search.

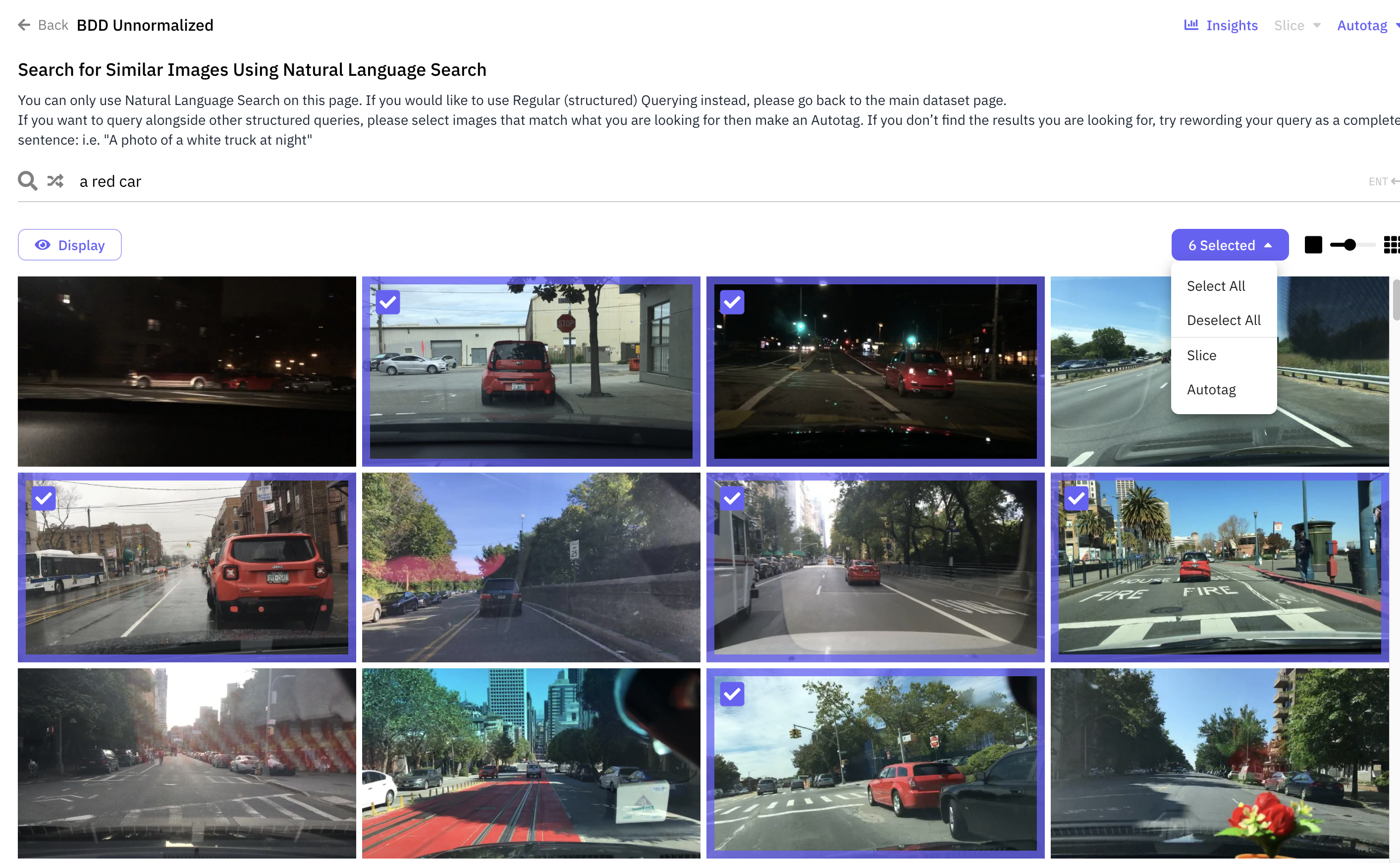

From here, you can browse the matching images, create an Autotag, or run another natural language search. To switch back to regular (structured) querying, return to the main dataset page. To combine natural language search with other structured queries, select the images that match what you are looking for and create an Autotag from them. If you don't find the results you are looking for, try rewording your query as a complete sentence, e.g. "A photo of a white truck at night".

Selecting images on results page of natural language search to create an Autotag.

For an example of searching for "a red car" in the Berkeley Deep Drive dataset, see [Berkeley Deep Drive Natural Language Search Example](https://dashboard.scale.com/nucleus/ds_bwkezj6g5c4g05gqp1eg?display=similarity_text&similar_text_query=a+red+car).
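
The example link encodes the search as URL query parameters: `display=similarity_text` plus the query text in `similar_text_query`, with spaces written as `+`. If you want to share links to natural language searches, the following is a minimal sketch of building one. It assumes, without confirmation from this page, that the same `/nucleus/<dataset_id>` pattern works for any dataset; `natural_language_search_url` is a hypothetical helper, not part of any Nucleus SDK.

```python
from urllib.parse import urlencode

# Base URL taken from the Berkeley Deep Drive example link.
# Assumption: other datasets follow the same /nucleus/<dataset_id> pattern.
DASHBOARD_BASE = "https://dashboard.scale.com/nucleus/"


def natural_language_search_url(dataset_id: str, query: str) -> str:
    """Build a dashboard link that opens natural language search results.

    Hypothetical helper: mirrors the query parameters seen in the example
    link (display=similarity_text, similar_text_query=<query>).
    """
    params = {"display": "similarity_text", "similar_text_query": query}
    # urlencode uses quote_plus by default, so spaces become '+' and
    # reserved characters such as '&' are percent-encoded.
    return f"{DASHBOARD_BASE}{dataset_id}?{urlencode(params)}"


if __name__ == "__main__":
    print(natural_language_search_url("ds_bwkezj6g5c4g05gqp1eg", "a red car"))
```

Letting `urlencode` handle escaping means queries containing characters such as `&` or `?` still produce a valid link.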

To generate the embeddings used in natural language search, we use OpenAI's CLIP model, which "is trained on a wide variety of images with a wide variety of natural language supervision that’s abundantly available on the internet." As a result, natural language search may return higher-quality results for datasets whose images resemble the images CLIP was trained on, and the results may show some slight biases. Additionally, because of how CLIP works, querying with a complete, descriptive phrase like "a photo of a white truck" often works better than a bare keyword query like "white truck". There is plenty of room for prompt engineering here, so we encourage you to try different phrasings of your query to see which one returns the best results!
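
Conceptually, CLIP maps images and text into a shared embedding space, and embeddings that are close together (typically measured by cosine similarity) tend to describe the same content. The sketch below illustrates that ranking idea and the "a photo of ..." phrasing tip. It is an illustration under stated assumptions, not Nucleus's implementation: the 3-dimensional vectors are made-up stand-ins for real CLIP embeddings (which come from the model and have hundreds of dimensions), and `to_prompt`, `cosine_similarity`, and `rank_images` are hypothetical helpers.

```python
import math
from typing import Dict, List, Sequence, Tuple

ARTICLES = ("a ", "an ", "the ")


def to_prompt(query: str) -> str:
    """Hypothetical helper: wrap a keyword query in a descriptive prompt."""
    q = query.strip()
    if not q:
        raise ValueError("query must not be empty")
    if q.lower().startswith("a photo"):
        return q  # already phrased as a descriptive prompt
    if not q.lower().startswith(ARTICLES):
        # Naive article choice based on the first letter only.
        q = ("an " if q[0].lower() in "aeiou" else "a ") + q
    return f"a photo of {q}"


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_images(
    text_embedding: Sequence[float],
    image_embeddings: Dict[str, Sequence[float]],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the top_k (image_id, score) pairs, most similar first."""
    scored = [
        (image_id, cosine_similarity(text_embedding, emb))
        for image_id, emb in image_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    prompt = to_prompt("red car")  # "a photo of a red car"
    # Toy stand-in for the text embedding of `prompt` (not real CLIP output).
    text_embedding = [0.9, 0.1, 0.0]
    # Toy stand-ins for image embeddings (not real CLIP output).
    images = {
        "img_red_car": [0.8, 0.2, 0.1],
        "img_intersection": [0.1, 0.9, 0.3],
        "img_night_truck": [0.2, 0.1, 0.9],
    }
    print(prompt)
    for image_id, score in rank_images(text_embedding, images):
        print(f"{image_id}: {score:.3f}")
```

Because cosine similarity ignores vector length, only the direction of each embedding affects the ranking, which is why rewording a query (and thereby moving its embedding) can change the results noticeably.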

For a more powerful visual similarity tool, continue reading to learn how to use Nucleus Autotag.
