Segmentation Annotations

Nucleus supports instance, semantic, and panoptic segmentation on image datasets. We are currently building out support for video datasets.

In this guide, we'll use the Python SDK to construct segmentation annotations. A segmentation annotation object in Nucleus has four components:

- URL of the mask PNG, with each pixel being a `uint8` ranging from 0-255

- List of `Segments` mapping each `uint8` value of the mask to an instance/class

- Reference ID of the item to which to apply the annotation

- Annotation ID, an optional unique (per dataset item) key to refer to the annotation

Segmentation Masks

Nucleus expects masks to be image-height by image-width `uint8` arrays saved in PNG format. Each pixel value should fall in the range [0, N), where N is the number of possible instances (for panoptic and instance segmentation) or classes (for semantic segmentation).
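To make the mask format concrete, here is a minimal sketch that encodes a small grid of pixel values as an 8-bit grayscale PNG using only the Python standard library. The helper name `encode_gray_png` and the tiny `mask` are hypothetical and not part of the Nucleus SDK; in practice you would likely produce masks with an imaging library instead.

```python
import struct
import zlib


def encode_gray_png(rows):
    """Encode rows of integers in [0, 255] as an 8-bit grayscale PNG."""
    height, width = len(rows), len(rows[0])
    if any(len(row) != width for row in rows):
        raise ValueError("all mask rows must have the same width")

    # Each scanline starts with a filter-type byte; 0 means "no filter".
    # bytes(row) raises ValueError for any value outside 0-255.
    raw = b"".join(b"\x00" + bytes(row) for row in rows)

    def chunk(tag, data):
        # PNG chunk layout: length, type, data, CRC-32 over type + data.
        crc = zlib.crc32(tag + data) & 0xFFFFFFFF
        return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)

    # IHDR: width, height, bit depth 8, color type 0 (grayscale),
    # compression 0, filter 0, interlace 0.
    header = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    return (
        b"\x89PNG\r\n\x1a\n"
        + chunk(b"IHDR", header)
        + chunk(b"IDAT", zlib.compress(raw))
        + chunk(b"IEND", b"")
    )


# Hypothetical 4x6 mask using three pixel values (N = 3): 0, 1, and 2.
mask = [
    [0, 0, 1, 1, 0, 0],
    [0, 1, 1, 1, 2, 0],
    [0, 1, 1, 2, 2, 0],
    [0, 0, 0, 2, 2, 0],
]
png_bytes = encode_gray_png(mask)
```

The resulting `png_bytes` can be written to a `.png` file and uploaded to your own storage bucket before you reference it from an annotation.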

Your segmentation masks must be accessible to Scale via remote URL (e.g. s3://, gs://, signed URL). Currently, Nucleus only supports uploading masks via remote URL, but local file uploads will be available soon!

Updating an existing segmentation mask

Nucleus requires that each dataset item has exactly one ground truth segmentation mask. You can replace an existing segmentation mask with a new one by setting `update=True` when calling `Dataset.annotate`; otherwise, the new mask will be ignored for any items that already have one.
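As a rough sketch of the replace-versus-ignore behavior described above, the hypothetical helper below forwards a flag to the `update` parameter of `Dataset.annotate`. The helper name `upload_mask` and the `annotations=` keyword are assumptions made for illustration; check the SDK reference for the exact signature.

```python
def upload_mask(dataset, annotation, replace_existing=False):
    """Upload one segmentation annotation to a Nucleus dataset.

    `dataset` is expected to be a Nucleus Dataset object. The `annotations=`
    keyword name is an assumption; `update=` comes from the guide text.
    """
    # With update=False, items that already have a mask keep the old one.
    # With update=True, the existing mask is replaced by the new one.
    return dataset.annotate(annotations=[annotation], update=replace_existing)
```

Defaulting `replace_existing` to `False` keeps accidental overwrites of existing ground truth opt-in.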

For more information, check out our Python SDK reference for SegmentationAnnotations.

Segments

After constructing mask PNGs with corresponding pixel values, the next step is to tell Nucleus how to map pixel values to instances/classes in your taxonomy.

The Nucleus API uses Segments to define this mapping from pixel value to instance/class. This provides the flexibility to work with panoptic, instance, or semantic segmentation masks as needed.

Concretely, you will need a Segment for each unique pixel value in the mask. This means one Segment per instance for panoptic and instance segmentation, or per class for semantic segmentation.
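Before uploading, it can help to confirm that the pixel values in a mask and the segment indices line up one-to-one. The following is a minimal sketch in plain Python; `check_segment_coverage` is a hypothetical helper, not part of the Nucleus SDK, and it takes the mask as a list of rows of integers.

```python
def check_segment_coverage(mask_rows, segment_indices):
    """Compare the pixel values in a mask against a list of segment indices.

    Returns three sorted lists:
      missing    - pixel values in the mask that have no segment
      unused     - segment indices that never appear in the mask
      duplicated - segment indices listed more than once
    """
    pixel_values = {value for row in mask_rows for value in row}
    index_set = set(segment_indices)

    missing = sorted(pixel_values - index_set)
    unused = sorted(index_set - pixel_values)
    duplicated = sorted(
        index for index in index_set if segment_indices.count(index) > 1
    )
    return missing, unused, duplicated


# Hypothetical mask with pixel values 0, 1, 2 and a faulty index list.
example_mask = [
    [0, 0, 1],
    [2, 1, 1],
]
missing, unused, duplicated = check_segment_coverage(example_mask, [0, 1, 3, 3])
```

An empty `missing` list means every pixel value in the mask has a segment to map it to a label.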

Each Segment can also house arbitrary key-value metadata.

For more information, check out our Python SDK reference for Segments.

Example

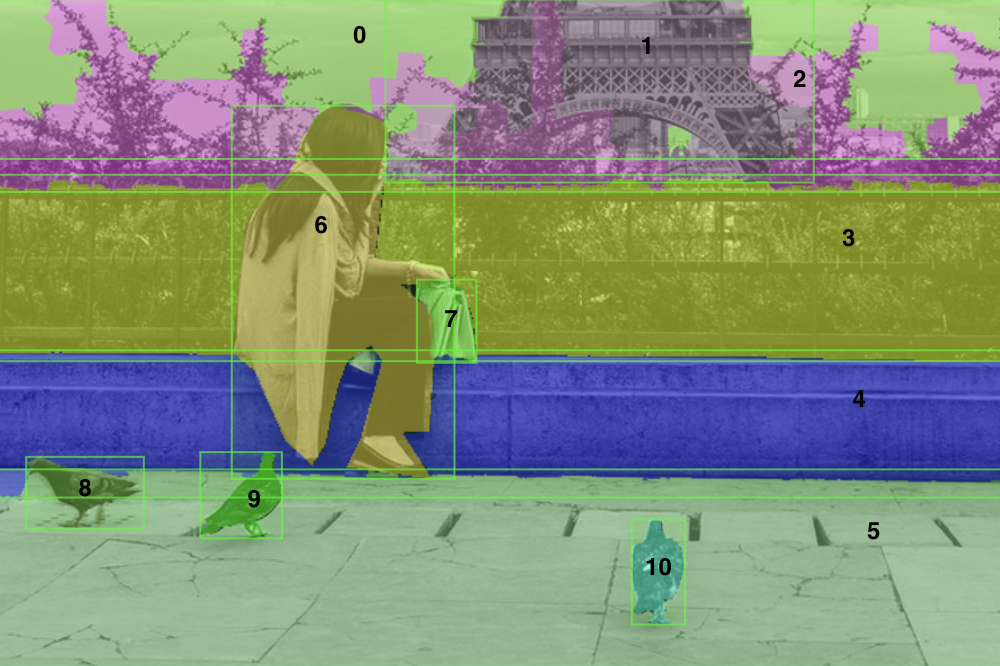

Suppose we are applying a segmentation mask to the following image. In this case, we are working with panoptic segmentation.

Image from MSCOCO (original) with panoptic mask + object detection boxes.

Here, we would construct 11 Segments to map each pixel value in the mask PNG to a class instance as follows:

| Pixel Value | Class Instance |
|---|---|
| 0 | Background |
| 1 | Building |
| 2 | Tree |
| 3 | Fence |
| 4 | Wall |
| 5 | Pavement |
| 6 | Person |
| 7 | Handbag |
| 8 | Bird 1 |
| 9 | Bird 2 |
| 10 | Bird 3 |

In code, this is as simple as:

    from nucleus import Segment, SegmentationAnnotation

    # One Segment per unique pixel value in the mask PNG.
    # Indices follow the table above (3 = fence, 4 = wall).
    segmentation = SegmentationAnnotation(
        mask_url="s3://your-bucket-name/segmentation-masks/image_2_mask_1.png",
        annotations=[
            Segment(label="background", index=0),
            Segment(label="building", index=1),
            Segment(label="tree", index=2),
            Segment(label="fence", index=3),
            Segment(label="wall", index=4),
            Segment(label="pavement", index=5),
            # Segments can carry arbitrary key-value metadata.
            Segment(label="person", index=6, metadata={"pose": "sitting"}),
            Segment(label="handbag", index=7),
            # Each bird instance gets its own pixel value and Segment.
            Segment(label="bird", index=8),
            Segment(label="bird", index=9),
            Segment(label="bird", index=10),
        ],
        reference_id="image_2",
        annotation_id="image_2_mask_1",
    )

For instance segmentation, you might consolidate and only use segments:

- 0, 1, 2, 3, 4, 5: Background

- 6: Person

- 7: Handbag

- 8: Bird 1

- 9: Bird 2

- 10: Bird 3

For semantic segmentation, you might consolidate and only use segments:

- 0: Background

- 1: Building

- 2: Tree

- 3: Fence

- 4: Wall

- 5: Pavement

- 6: Person

- 7: Handbag

- 8, 9, 10: Bird
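If you would rather rewrite the mask itself so that the three birds share a single pixel value, one option is a lookup-table remap. The sketch below is plain Python operating on a list of rows; `remap_mask` and `PANOPTIC_TO_SEMANTIC` are hypothetical names, and the choice to collapse values 9 and 10 onto 8 is an assumption based on the semantic grouping listed above.

```python
# Pixel values 0-7 keep their meaning; birds 8, 9, and 10 collapse onto 8.
PANOPTIC_TO_SEMANTIC = {value: value for value in range(8)}
PANOPTIC_TO_SEMANTIC.update({8: 8, 9: 8, 10: 8})


def remap_mask(mask_rows, mapping):
    """Return a new mask with every pixel value replaced via `mapping`.

    Raises KeyError if the mask contains a value that `mapping` lacks,
    which surfaces unexpected pixel values early.
    """
    return [[mapping[value] for value in row] for row in mask_rows]


# Hypothetical 2x3 fragment of the panoptic mask.
panoptic_fragment = [
    [0, 8, 9],
    [10, 6, 7],
]
semantic_fragment = remap_mask(panoptic_fragment, PANOPTIC_TO_SEMANTIC)
```

After a remap like this, the semantic mask needs only one `Segment` for the bird class rather than one per bird.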
