Image Dataset

Overview

In this guide, we'll walk through the steps to upload your 2D image data to Nucleus.

- Create a Dataset for DatasetItems
- Create image DatasetItems
- Upload DatasetItems to the Dataset
- Update metadata

Creating a Dataset

Get started by creating a new Dataset to upload your 2D data to. If you already have a Dataset, you can retrieve it by its dataset ID instead. You can list information about all of your datasets using NucleusClient.datasets, or copy a dataset's ID from the Nucleus dashboard URL after clicking into the Dataset.

```python
from nucleus import NucleusClient

client = NucleusClient(YOUR_API_KEY)  # replace with your Scale API key

# Create a new, empty Dataset for your 2D images
dataset = client.create_dataset(YOUR_DATASET_NAME)
```

Creating Image DatasetItems

Next, we'll create the DatasetItems to upload via the API.

To upload items via the API, you'll first need to construct DatasetItem payloads. The best way to do so is with the Python SDK's DatasetItem constructor, which takes the following parameters:

| Property | Type | Description |
|---|---|---|
| image_location | string (required) | Local path or remote URL to the image. For large uploads, the data must be stored in AWS S3, Google Cloud Storage, or Azure Blob Storage to allow faster concurrent and asynchronous processing. See the guide on granting Scale access to your remote data. |
| reference_id | string (required) | A user-specified identifier for the image, typically an internal filename or any other unique, easily identifiable name. |
| metadata | dict | Optional metadata pertaining to the image, e.g. time of day or weather. These attributes are queryable in the Nucleus platform. Metadata can be updated after uploading (via reference ID). |
| upload_to_scale | boolean | Set this to False to use privacy mode for this item. The data will then not be uploaded to Scale, and image_location must be a URL that is accessible to anyone who wants to view the images in Nucleus. Note: privacy mode is only available to enterprise customers. |
| pointcloud_location | string | Do not supply this parameter for 2D items! DatasetItems can also hold lidar pointclouds; see our 3D guide for more info. |

```python
from nucleus import DatasetItem

# Publicly accessible image URLs
accessible_urls = [
    "http://farm1.staticflickr.com/107/309278012_7a1f67deaa_z.jpg",
    "http://farm9.staticflickr.com/8001/7679588594_4e51b76472_z.jpg",
    "http://farm6.staticflickr.com/5295/5465771966_76f9773af1_z.jpg",
    "http://farm4.staticflickr.com/3449/4002348519_8ddfa4f2fb_z.jpg",
]

# One unique reference ID per image
reference_ids = ["107", "8001", "5295", "3449"]

# Optional, queryable metadata for each image
metadata_dicts = [
    {"indoors": True},
    {"indoors": True},
    {"indoors": False},
    {"indoors": False},
]

# Build one DatasetItem per image
dataset_items = []
for url, ref_id, metadata in zip(accessible_urls, reference_ids, metadata_dicts):
    item = DatasetItem(image_location=url, reference_id=ref_id, metadata=metadata)
    dataset_items.append(item)
```
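Reference IDs identify items, and with update=True a shared reference ID overwrites an existing item's metadata, so it can help to derive them systematically and check for collisions before uploading. The sketch below shows one hypothetical convention, not part of the Nucleus SDK: it uses each URL's file name (without extension) as the reference ID and raises an error on duplicates. The helper names reference_id_from_url and make_reference_ids are our own.

```python
import posixpath
from typing import List
from urllib.parse import urlparse


def reference_id_from_url(url: str) -> str:
    """Hypothetical convention: use the file name without its extension."""
    filename = posixpath.basename(urlparse(url).path)
    return posixpath.splitext(filename)[0]


def make_reference_ids(urls: List[str]) -> List[str]:
    """Derive one reference ID per URL and fail loudly on collisions."""
    ids = [reference_id_from_url(u) for u in urls]
    duplicates = sorted({i for i in ids if ids.count(i) > 1})
    if duplicates:
        raise ValueError(f"Duplicate reference IDs: {duplicates}")
    return ids
```

You could then pass make_reference_ids(accessible_urls) in place of the hard-coded reference_ids list; note that this yields IDs such as "309278012_7a1f67deaa_z" rather than the short numeric IDs used above.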

Maximum Image Size

Your images must be at most 5,000px by 5,000px. For larger images, we recommend downsizing them to fit within this limit.
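Because the limit applies to both width and height, one way to downsize is to scale both dimensions by the same factor so that neither side exceeds 5,000px. The sketch below only computes the target size while preserving aspect ratio; fit_within_limit is a hypothetical helper, and the actual resizing is left to whatever image library you use.

```python
from typing import Tuple

MAX_SIDE = 5000  # maximum width and height in pixels, per the limit above


def fit_within_limit(width: int, height: int, max_side: int = MAX_SIDE) -> Tuple[int, int]:
    """Scale (width, height) down, keeping the aspect ratio, so neither side exceeds max_side.

    Images already within the limit are returned unchanged (never upscaled).
    """
    scale = min(1.0, max_side / width, max_side / height)
    # int() truncates, so rounding can never push a side back over the limit
    return max(1, int(width * scale)), max(1, int(height * scale))
```

For example, fit_within_limit(10000, 5000) returns (5000, 2500). With Pillow, Image.thumbnail((5000, 5000)) performs a similar aspect-preserving downscale in place.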

Uploading DatasetItems to Nucleus

We'll upload to the Dataset created earlier in this guide. You can always retrieve a Dataset by its dataset ID: list all of your datasets' IDs using NucleusClient.datasets, or copy one from the Nucleus dashboard URL after clicking into the Dataset.

```python
from nucleus import NucleusClient

client = NucleusClient(YOUR_API_KEY)  # replace with your Scale API key

# Retrieve an existing Dataset by its ID
dataset = client.get_dataset(YOUR_DATASET_ID)
```

With your images and dataset ready, you can now upload to Nucleus using Dataset.append.

```python
res = dataset.append(items=dataset_items)
```

Large Batch Uploads

When calling dataset.append, we highly recommend setting asynchronous=True for larger uploads; concurrent processing dramatically increases upload throughput.

DatasetItem.image_location must be a remote URL (e.g. S3) to use asynchronous processing.
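Since asynchronous uploads need remote image locations, a quick pre-flight check can catch local paths before you call dataset.append. This sketch treats s3://, gs://, http:// and https:// locations as remote (an assumption; Azure Blob Storage URLs are typically https://) and everything else, including plain file paths, as local. is_remote_location and find_local_items are hypothetical helpers, not SDK functions.

```python
from typing import Iterable, List
from urllib.parse import urlparse

# Assumption: schemes treated as remote; adjust to match where your data lives
REMOTE_SCHEMES = {"s3", "gs", "http", "https"}


def is_remote_location(location: str) -> bool:
    """Heuristic: True if the location looks like a remote URL rather than a local path."""
    return urlparse(location).scheme.lower() in REMOTE_SCHEMES


def find_local_items(locations: Iterable[str]) -> List[str]:
    """Return the locations that would block an asynchronous upload."""
    return [loc for loc in locations if not is_remote_location(loc)]
```

For example, find_local_items([item.image_location for item in dataset_items]) should return an empty list before you set asynchronous=True.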

```python
job = dataset.append(
    items=dataset_items,  # URLs must be remote for async uploads!
    asynchronous=True,
)

# Async jobs run in the background; poll their status with:
job.status()

# or block until the job completes with:
job.sleep_until_complete()
```

Updating Metadata

By setting update=True in Dataset.append, your upload will overwrite the metadata of any existing item that shares a reference_id with an uploaded item.

For instance, in our example we uploaded an item with reference ID 107. We can overwrite this item's metadata to include a new key-value pair as follows:

```python
from nucleus import DatasetItem

updated_item = DatasetItem(
    image_location="http://farm1.staticflickr.com/107/309278012_7a1f67deaa_z.jpg",
    reference_id="107",
    metadata={"indoors": True, "room": "living room"},
)

job = dataset.append(
    items=[updated_item],
    update=True,  # overwrite on reference ID collision
    asynchronous=True,
)
job.sleep_until_complete()
```
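The example above restates "indoors": True next to the new "room" key, which suggests the uploaded dict replaces the item's previous metadata rather than being merged into it; treat that as an assumption and verify it against your own data. If you keep a local copy of each item's metadata, a small hypothetical helper like merged_metadata can combine old and new keys before re-uploading:

```python
from typing import Any, Dict


def merged_metadata(existing: Dict[str, Any], updates: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict: existing keys are kept, and keys in updates win on conflict."""
    # Neither input dict is modified
    return {**existing, **updates}
```

For instance, merged_metadata({"indoors": True}, {"room": "living room"}) returns {"indoors": True, "room": "living room"}, which you could pass as the metadata argument of DatasetItem.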

We highly recommend adding as much metadata as possible! Metadata is queryable in the Nucleus dashboard and can unlock many data exploration and curation workflows. See our metadata guide for more info.

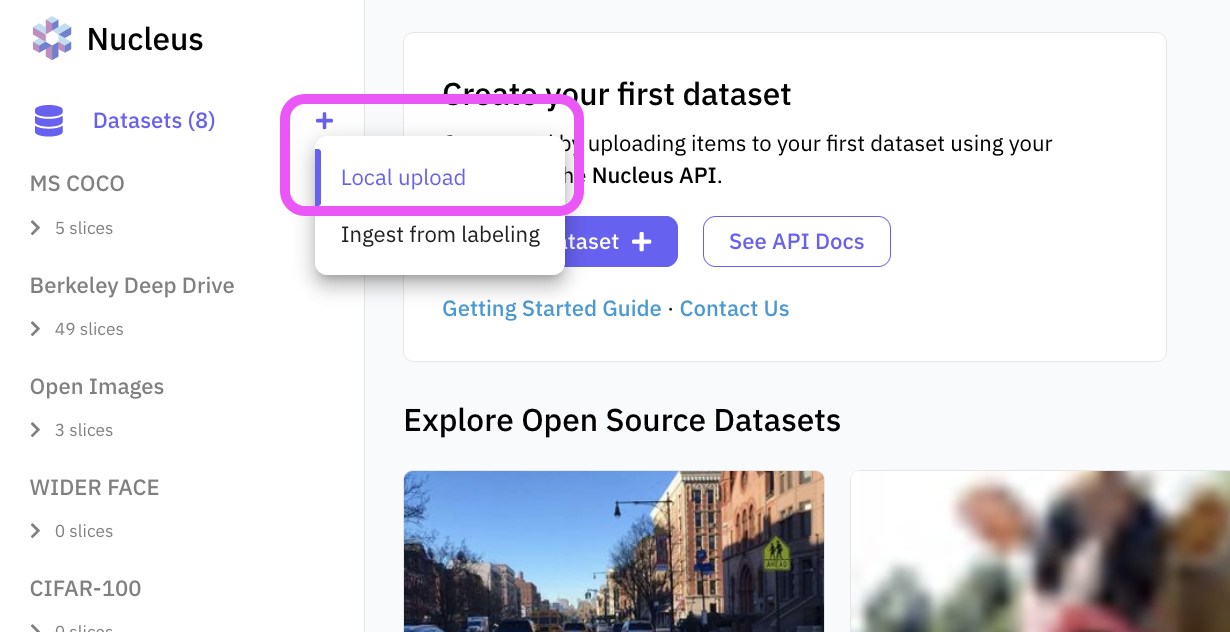

Dashboard Upload

Using the Nucleus dashboard, you can upload directly from disk or import an existing Scale or Rapid labeling project.

Uploading local files from the dashboard (creates a new Dataset).
